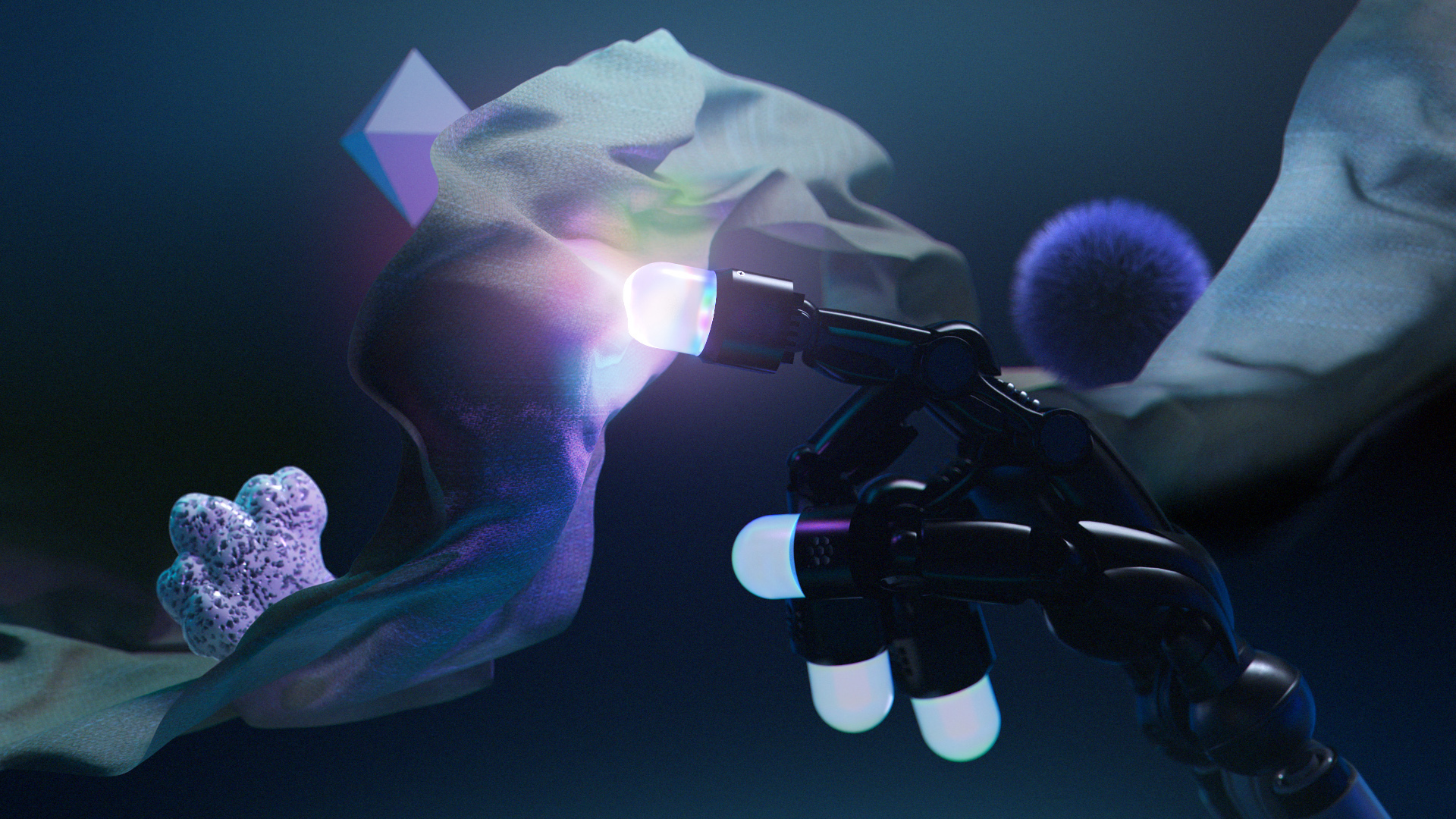

This October, Meta AI achieved breakthroughs in robotics and Human-Machine Interaction (HMI), advancing AI-driven precision and control for human-robot collaboration. Committed to open-source development, Meta AI has publicly shared both the research and its supporting code. Read on to explore what this work could mean for the future of AI.

Today’s AI systems struggle to understand and interact with the physical world, an ability that is crucial for everyday chores. To develop embodied AI agents, our Fundamental AI Research (FAIR) team is collaborating with robotics specialists. These agents should be able to perceive and interact with their surroundings and collaborate with people, assisting in both the physical and virtual worlds. In our view, this is a significant step toward advanced machine intelligence (AMI).

Meta announced new research tools aimed at enhancing human-robot collaboration, robotic skills, and touch perception. Touch is one of the most fundamental ways people engage with the world, and we’re excited to introduce Digit 360, a fingertip-shaped tactile sensor that delivers detailed, accurate touch data.

Digit 360

Introducing Digit 360, a finger-shaped tactile sensor that precisely digitizes touch to deliver rich, comprehensive tactile data.

To develop and commercialize these touch-sensing innovations, we have partnered with Wonik Robotics and GelSight Inc. GelSight Inc. plans to manufacture and sell Digit 360 next year, and researchers can apply for early access through the Digit 360 call for proposals. Our collaboration with Wonik Robotics aims to use Meta Digit Plexus to build a new advanced robot hand with integrated touch sensing: Wonik Robotics will manufacture and distribute the next generation of the Allegro Hand, set to debut next year. Interested researchers can complete a form to receive updates on this release.

To be truly useful, robots must handle social interaction as well as physical tasks. We developed the PARTNR benchmark to provide a common method for evaluating human-robot collaborative planning and reasoning. PARTNR enables large-scale, repeatable evaluations of embodied models, including planners based on large language models, across a variety of collaboration scenarios. It also accounts for real-world constraints such as time and space. Our goal with PARTNR is to advance human-robot collaboration and interaction, turning AI models from “agents” into true “partners.”

Meta Sparsh

Meta AI is publicly releasing Sparsh, the first general-purpose encoder for vision-based tactile sensing.

Vision-based tactile sensors come in many varieties, differing in shape, illumination, and gel markings. Current approaches rely on handcrafted models tailored to a specific task and sensor. This approach is hard to scale because collecting labeled data, such as forces and slip, is often very costly. Sparsh, in contrast, does not need labeled data: it builds on recent advances in self-supervised learning (SSL) to work across many types of vision-based tactile sensors and tasks. The team pre-trained these models on a large dataset of over 460,000 tactile images, improving Sparsh’s versatility and effectiveness.
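Sparsh’s exact training recipe is not described here, so the following is only a minimal sketch of one widely used SSL objective, masked image modeling in the style of masked autoencoders (MAE), applied to a single tactile frame. The training signal comes from the image itself: random patches are hidden and the model is scored on reconstructing them, so no force or slip labels are needed. The function names, the 16-pixel patch size, the 75% mask ratio, and the random stand-in frame are illustrative assumptions, and a trivial mean-patch predictor replaces the neural network.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles.

    Returns an array of shape (num_patches, patch * patch * C).
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)


def random_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator):
    """Randomly choose which patches stay visible and which must be reconstructed."""
    num_masked = int(round(num_patches * mask_ratio))
    order = rng.permutation(num_patches)
    return np.sort(order[num_masked:]), np.sort(order[:num_masked])


def masked_reconstruction_loss(predicted: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error computed only on the masked patches."""
    return float(np.mean((predicted - target) ** 2))


# Hypothetical usage: one unlabeled 224x224 RGB tactile frame (random stand-in data).
rng = np.random.default_rng(0)
frame = rng.random((224, 224, 3), dtype=np.float32)
patches = patchify(frame, patch=16)  # 196 patches of 16*16*3 = 768 values
visible_idx, masked_idx = random_mask(len(patches), mask_ratio=0.75, rng=rng)
# A real encoder/decoder would see patches[visible_idx] and predict patches[masked_idx];
# here a trivial "predict the mean visible patch" baseline stands in for the network.
prediction = np.broadcast_to(patches[visible_idx].mean(axis=0), patches[masked_idx].shape)
loss = masked_reconstruction_loss(prediction, patches[masked_idx])
```

Once trained this way, such an encoder can be reused as a backbone for downstream tactile tasks without retraining it from labeled data.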

To standardize evaluation across touch models, we also introduce a new benchmark of six touch-centric tasks, ranging from understanding tactile properties to enabling physical perception and dexterous planning. On this benchmark, Sparsh outperforms task- and sensor-specific models by over 95% on average. By providing pre-trained backbones for tactile sensing, we intend to empower the community to scale and extend these models toward innovative applications in robotics, AI, and other domains.
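The text does not spell out how the 95% average is computed. One common way to summarize a multi-task benchmark, assumed here for illustration only, is to compute each task’s relative improvement over its baseline and then average those values, so that tasks with different metrics and scales count equally. The helper names and the toy scores below are hypothetical and are not Sparsh results.

```python
from statistics import mean


def relative_improvement(new_score: float, baseline_score: float, higher_is_better: bool = True) -> float:
    """Relative improvement of new_score over baseline_score, as a fraction (0.5 == 50%)."""
    if baseline_score == 0:
        raise ValueError("baseline score must be non-zero")
    if higher_is_better:
        return (new_score - baseline_score) / abs(baseline_score)
    # For error-style metrics (lower is better), a reduction counts as an improvement.
    return (baseline_score - new_score) / abs(baseline_score)


def average_relative_improvement(results: dict[str, tuple[float, float, bool]]) -> float:
    """Average per-task improvements so each task counts equally, regardless of metric scale.

    results maps task name -> (new_score, baseline_score, higher_is_better).
    """
    return mean(relative_improvement(new, base, hib) for new, base, hib in results.values())


# Made-up scores for two hypothetical tasks: an accuracy-like metric and an error metric.
toy_results = {
    "task_a": (3.0, 2.0, True),   # higher is better
    "task_b": (1.0, 2.0, False),  # lower is better (e.g. a force-estimation error)
}
example = average_relative_improvement(toy_results)
```

Averaging per-task ratios, rather than raw score differences, keeps a task measured in large units from dominating the summary.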

Meta Digit 360: Fingertip Sensor with Tactile Sensitivity

As stated by Meta AI,

We are excited to introduce Digit 360, an artificial finger-shaped tactile sensor that digitizes touch and provides rich tactile data for touch perception research. Researchers can combine its different sensing modalities or isolate specific signals for in-depth analysis. This technology helps researchers create AI with a better grasp of physical properties, human-object interaction, and contact mechanics. Digit 360 outperforms its predecessors at detecting minute spatial changes and can sense forces as small as 1 millinewton.

Using more than 8 million taxels, we created an optical touch perception system that captures omnidirectional deformations of the fingertip. Each contact produces a distinct profile determined by the mechanical, geometric, and chemical properties of the surface. The sensor also includes additional modalities for detecting heat, vibration, and odor, which enrich what it can perceive.

By transforming tactile experiences into digital data, this technology enhances AI-driven touch perception and lets researchers examine individual signals. Thanks to its sophisticated optical system with over 8 million taxels, Digit 360 can detect even the smallest spatial changes and forces as small as 1 millinewton. An embedded AI accelerator processes signals locally on the device, enabling quick reactions to stimuli. Because it records touch information beyond the limits of human perception, the sensor has a wide range of applications, from improving virtual reality to increasing the dexterity of robots and prosthetics. This leads to more realistic interactions and deepens AI’s understanding of the real world.
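Since Digit 360 lets researchers either combine modalities or study a single signal in isolation, one simple way to picture its output is a record per time step that keeps each modality separate. The sketch below assumes a hypothetical schema (`TactileSample`, and modality names such as `"optical"`, `"vibration"`, and `"temperature"`) and uses random stand-in arrays; it does not reflect Digit 360’s actual data format or software interface.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List

import numpy as np


@dataclass
class TactileSample:
    """One time-stamped reading from a multimodal fingertip sensor (hypothetical schema)."""
    timestamp_s: float
    # Each modality is stored separately so it can be analyzed on its own or fused.
    signals: Dict[str, np.ndarray] = field(default_factory=dict)


def select(samples: Iterable[TactileSample], modality: str) -> np.ndarray:
    """Stack a single modality across samples, e.g. to study vibration in isolation."""
    return np.stack([s.signals[modality] for s in samples])


def fuse(sample: TactileSample, modalities: List[str]) -> np.ndarray:
    """Concatenate the chosen modalities into one flat feature vector for a downstream model."""
    return np.concatenate([sample.signals[m].ravel() for m in modalities])


# Hypothetical usage with random stand-in data for three consecutive readings.
rng = np.random.default_rng(0)
samples = [
    TactileSample(
        timestamp_s=t * 0.001,
        signals={
            "optical": rng.random((8, 8)),    # stand-in for a small deformation image
            "vibration": rng.random(64),      # stand-in for a short vibration window
            "temperature": np.array([25.0]),  # degrees Celsius
        },
    )
    for t in range(3)
]
vibration_only = select(samples, "vibration")             # shape (3, 64)
features = fuse(samples[0], ["optical", "temperature"])  # 8*8 + 1 = 65 values
```

Keeping modalities in separate arrays makes both workflows cheap: isolation is a lookup by name, and fusion is a concatenation in whatever order the model expects.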

Meta Digit Plexus: A unified platform for tactile-sensing hands

As stated by Meta AI,

We present Meta Digit Plexus, a standardized hardware-software platform for integrating touch sensors on a single robot hand. The platform connects vision-based and skin-based tactile sensors, such as Digit, Digit 360, and ReSkin, located on the palm, fingers, and fingertips, to control boards. These boards encode all of the data and send it to a host computer. Thanks to the platform’s integrated hardware and software, data collection, control, and analysis all run over a single cable.

By building this standard platform from the ground up, we aim to advance research on robot dexterity and artificial intelligence. To support touch perception research, we are open-sourcing the code and design for Meta Digit Plexus.

Meta Digit Plexus is a unified hardware-software platform that integrates tactile sensors on a robotic hand, combining many sensor types across the palm and fingers. This standardized platform sends all sensor data to a host computer over a single connection, streamlining data collection and processing. Plexus enables accurate, adaptable touch interfaces, advancing the development of more sensitive, human-like robotic systems.
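Routing many sensors through one connection generally requires framing: each chunk of data is tagged with its source so the host can split the shared stream back apart. The sketch below illustrates that idea with Python’s `struct` module, using a hypothetical 4-byte header (board id, sensor id, payload length) and a hypothetical `Frame` type; it is not the actual Digit Plexus protocol.

```python
import struct
from dataclasses import dataclass
from typing import Iterator, List

# Hypothetical frame header: board id (1 byte), sensor id (1 byte), payload length (2 bytes),
# little-endian with no padding, so HEADER.size == 4.
HEADER = struct.Struct("<BBH")


@dataclass
class Frame:
    board_id: int   # which control board produced the data
    sensor_id: int  # which sensor on that board (e.g. a fingertip or palm patch)
    payload: bytes  # encoded sensor data


def encode(frames: List[Frame]) -> bytes:
    """Interleave frames from many sensors into one byte stream (the 'single cable')."""
    out = bytearray()
    for f in frames:
        out += HEADER.pack(f.board_id, f.sensor_id, len(f.payload))
        out += f.payload
    return bytes(out)


def decode(stream: bytes) -> Iterator[Frame]:
    """Host side: split the shared stream back into per-sensor frames."""
    offset = 0
    while offset < len(stream):
        board_id, sensor_id, length = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        payload = stream[offset:offset + length]
        if len(payload) != length:
            raise ValueError("truncated frame")
        offset += length
        yield Frame(board_id, sensor_id, payload)


# Hypothetical usage: two sensors on different boards share one stream.
frames = [Frame(0, 1, b"\x01\x02"), Frame(2, 0, b"abc")]
stream = encode(frames)
recovered = list(decode(stream))
```

A fixed-size header keeps host-side parsing simple; a production protocol would typically also carry timestamps and checksums.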

Meta’s Dedication to the Advancement of Robotic Touch Perception

We believe that advancing robotics and touch perception will transform the open-source community and create new opportunities across many sectors. We are committed to providing open access to models, datasets, and software to drive the next generation of robotics AI research. We’re excited to bring this hardware to researchers in collaboration with Wonik Robotics and GelSight Inc. By working with the community, we take a step closer to a future where society benefits from artificial intelligence and robotics.